There’s a problem emerging in AI that most people haven’t noticed yet.

But you’ve probably felt it.

Every LinkedIn post sounds the same. Marketing copy has lost its edge. Even the “AI-generated” label is becoming unnecessary: you can just tell.

Now we know why.

Researchers at Cambridge and the Oxford Applied & Theoretical Machine Learning (OATML) group identified a phenomenon called “model collapse.” When AI systems train on AI-generated content, they lose diversity and originality with each generation. The models don’t improve. They degrade.

And it’s not a future problem. It’s happening now.

The Feedback Loop Nobody Wanted

Here’s how it works:

AI generates content → That content floods the internet → AI trains on that content → Next generation AI becomes less diverse → Repeat.

Each cycle narrows the creative range. What starts as “competent but generic” becomes “identical and lifeless.”

Research published in the journal Nature in 2024 showed that after just five generations of training on AI output, language models experienced “irreversible defects” in their ability to generate diverse, original responses.

Think of it like photocopying a photocopy. Each generation loses fidelity.
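
For the technically curious, here is a toy sketch of that photocopy effect in Python. It is an illustration under simplifying assumptions, not the setup used in the research: the “model” is just a table of word frequencies, and the function name `surviving_vocab_per_generation` and all of its parameters are made up for this example. Each generation samples text from the previous model and re-estimates frequencies from that sample alone, so any rare word that happens not to be sampled gets probability zero and can never come back.

```python
import random
from collections import Counter


def surviving_vocab_per_generation(vocab_size=50, sample_size=100,
                                   generations=30, seed=0):
    """Toy model-collapse loop: count distinct words left in each generation.

    All names and defaults here are hypothetical, chosen for illustration.
    """
    rng = random.Random(seed)
    words = list(range(vocab_size))

    # Generation 0 stands in for human text: a few common words
    # and a long tail of rare ones (Zipf-like weights, an assumption).
    weights = [1.0 / (rank + 1) for rank in range(vocab_size)]

    survivors = []
    for _ in range(generations):
        # The current model "writes" a corpus.
        corpus = rng.choices(words, weights=weights, k=sample_size)
        counts = Counter(corpus)
        survivors.append(len(counts))

        # The next model is "trained" only on that corpus:
        # its word probabilities are the observed frequencies.
        weights = [counts[w] for w in words]

    return survivors


if __name__ == "__main__":
    print(surviving_vocab_per_generation())
```

Calling `surviving_vocab_per_generation()` returns one count per generation. The list can only stay flat or shrink, which is the narrowing described above in miniature.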

The Evidence Is Everywhere

Google admitted the problem. Their March 2025 search algorithm update specifically targets “AI-generated content created primarily for search ranking” because it’s degrading search quality.

LinkedIn sees it in the data. Engagement on posts flagged as likely AI-generated dropped 34% year-over-year. Users are scrolling past the slop.

Marketing teams are backtracking. Companies that went “AI-first” in 2023-2024 are quietly bringing human writers back. Why? The content didn’t convert. It was invisible. Forgettable.

Even AI companies acknowledge it. OpenAI’s technical documentation now warns about “training data contamination” from synthetic content.

The term “AI slop” has entered mainstream business vocabulary. That should tell you something.

Why This Changes Everything

Most people think AI capabilities will keep improving exponentially.

But model collapse suggests a different trajectory: Without high-quality human-generated training data, AI gets worse, not better.
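
A small simulation makes this claim concrete. This is a hedged sketch, not a result from the research: the “model” is a bell curve fitted to ten numbers, the original human data is assumed to follow a standard normal distribution, and `final_spread` and `human_fraction` are hypothetical names. Each generation refits the curve to a training set in which a chosen fraction is fresh human data and the rest is the previous model’s own output.

```python
import random
import statistics


def final_spread(human_fraction, generations=500, sample_size=10, seed=0):
    """Return the spread (standard deviation) of the last training set.

    human_fraction is the share of each generation's training data drawn
    fresh from the original "human" distribution. Hypothetical toy setup.
    """
    rng = random.Random(seed)
    n_human = round(human_fraction * sample_size)

    # Generation 0: purely human data (assumed standard normal).
    data = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]

    for _ in range(generations):
        # "Train" the model: fit a mean and a spread to the current data.
        mu = statistics.mean(data)
        sigma = statistics.stdev(data)

        # Build the next training set from fresh human data
        # plus the model's own synthetic output.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_human)]
        synthetic = [rng.gauss(mu, sigma)
                     for _ in range(sample_size - n_human)]
        data = fresh + synthetic

    return statistics.stdev(data)


if __name__ == "__main__":
    print("AI output only:  ", final_spread(0.0))
    print("half human data: ", final_spread(0.5))
```

In this toy setting, `final_spread(0.0)` tends to shrink toward zero over many generations, while `final_spread(0.5)` stays close to the spread of the original data because half of every training set still comes from the source distribution.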

Which means human creativity isn’t just valuable despite AI.

It’s essential because of AI.

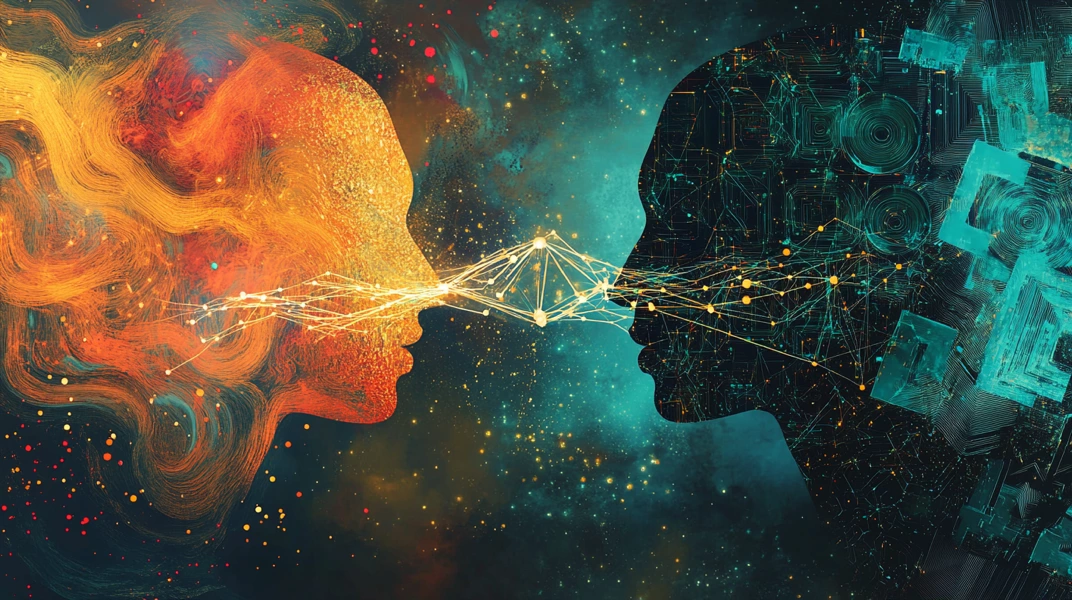

The AIs need us. They need original human thinking to maintain their quality. Without it, they spiral into increasingly narrow, repetitive patterns.

This flips the entire narrative.

We’re not in a race to keep up with AI.

We’re in a race to remain distinctly, detectably, irreplaceably human.

The Provably Human Premium

Here’s what’s already emerging in multiple industries:

Content creation: Clients now ask “Was this written by AI?” If yes, they pay less, or pass entirely.

Creative agencies: “Human-generated concepts, AI-assisted execution” is becoming standard positioning.

Journalism: Publications are adding “reported and written by [human name]” prominently to articles.

Consulting: Firms are emphasizing “proprietary frameworks based on human insight” over “AI analysis.”

Being detectably human is becoming a competitive advantage.

Not because human work is slower. But because it’s different. Original. Unpredictable in ways that matter.

What Model Collapse Reveals

The research exposes something fundamental about how value will work in an AI-saturated world:

Garbage in, garbage out, at scale.

When AI trains on AI, you get convergent mediocrity. When AI amplifies human originality, you get genuine breakthroughs.

The difference? The human input.

Your curiosity that asks questions no algorithm would generate.

Your judgment that recognizes when AI is giving you the probable instead of the possible.

Your weird connections between seemingly unrelated ideas.

Your lived experience that no training data contains.

This isn’t romantic nostalgia for pre-AI work. It’s cold economic reality: Original human thinking is the only sustainable input that prevents model collapse.

The Two Paths Forward

As model collapse accelerates, workers are splitting into two categories:

The Commoditized: Using AI to produce more of the same. Faster, but identical to everyone else’s output. Competing on price as quality degrades.

The Amplified: Using AI to explore and develop ideas that start distinctly human. Slower to generate, but impossible to replicate. Commanding premium value.

One group is feeding the collapse.

The other is preventing it.

What This Means for Your Career

The professionals thriving five years from now won’t be the ones who learned to prompt AI best.

They’ll be the ones who maintained the creative capacities AI can’t replicate:

- Asking questions that don’t have answers yet

- Making connections that don’t exist in any dataset

- Bringing judgment that no model can automate

- Generating truly original starting points that give AI something worth amplifying

Model collapse proves that AI needs constant infusions of human originality to maintain quality.

Which means the most valuable skill isn’t using AI.

It’s being the human that AI needs.

The Counter-Intuitive Move

Everything in our culture pushes toward efficiency. Do more. Move faster. Automate everything.

But model collapse reveals why that’s exactly wrong.

The most valuable thing you can do is become more distinctly human while using AI to amplify what makes you distinct.

Not “human despite AI.”

Not “human instead of AI.”

Human as the essential input that prevents AI from collapsing into mediocrity.

Your weird wonderings. Your unexpected connections. Your lived experience. Your taste and judgment.

These aren’t luxuries in an AI world.

They’re the only renewable resource that maintains value.

The Skills That Scale

Here’s what protects you from model collapse:

Forensic curiosity: The ability to wonder about things that don’t show up in any dataset yet.

Critical judgment: Knowing when AI is giving you the probable rather than the possible.

Serendipity engineering: Creating conditions where improbable connections become likely.

Authentic synthesis: Bringing your lived experience to shape how you guide AI.

These skills aren’t about working harder. They’re about thinking differently.

And they’re learnable.

The Choice

Model collapse will accelerate. More AI content will flood more channels. Quality will degrade further.

You can participate in the race to the bottom, generating more slop, faster.

Or you can position yourself as the human input that AI systems desperately need to maintain quality.

One path leads to commoditization.

The other leads to premium value that compounds over time.

The irony? AI itself is proving that human creativity isn’t obsolete.

It’s the only thing preventing AI from eating itself.

Want to develop the skills that prevent commoditization? Amplified Imagination teaches you to maintain distinctly human creativity while using AI as your amplifier, not your replacement. [Learn more →]